Unveiling the Emerging LLM App Stack: A New Horizon in Cybersecurity

From Contextual Data to App Hosting: Understanding the Intricate Layers of the LLM App Stack

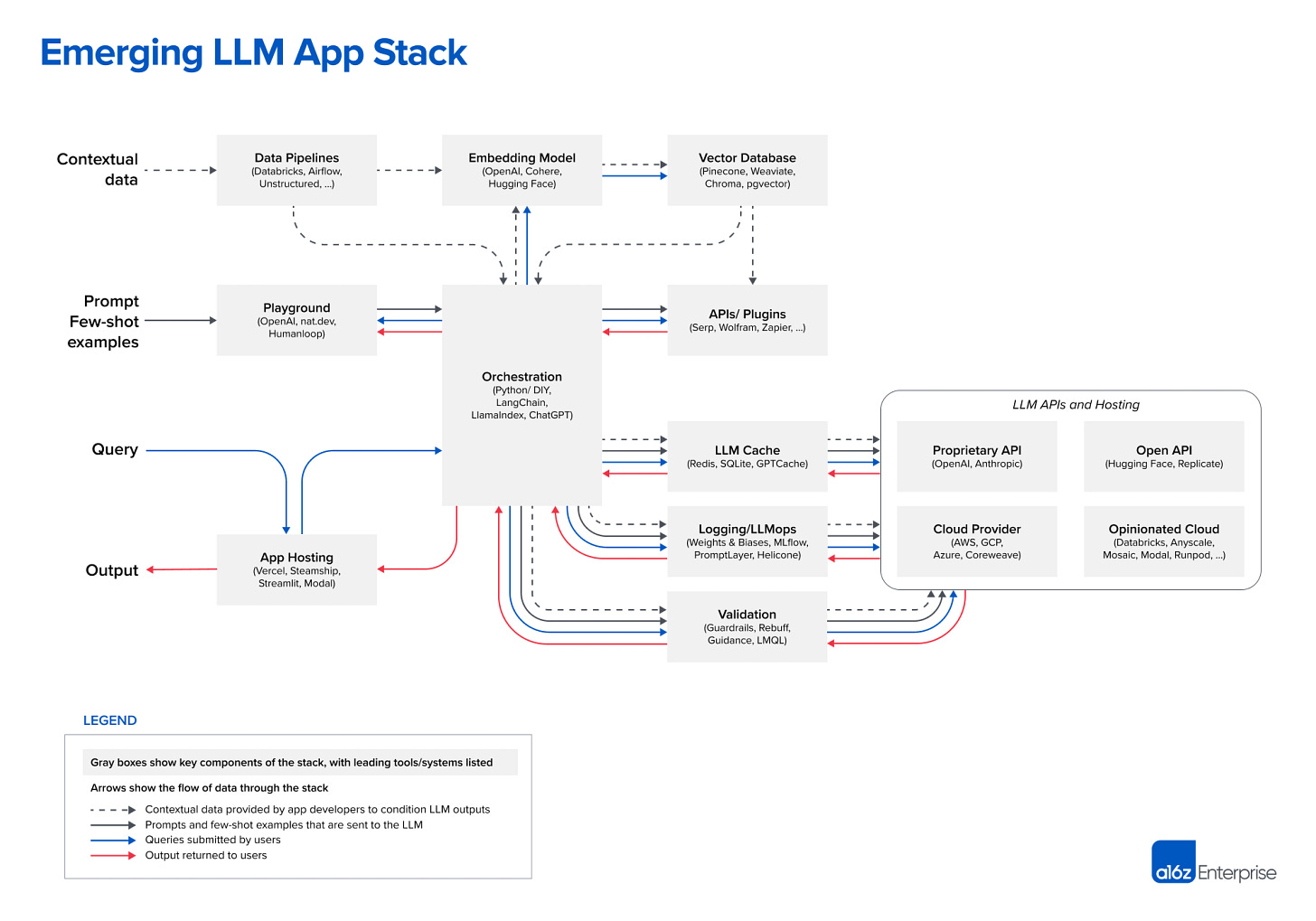

Image Reference: https://a16z.com/2023/06/20/emerging-architectures-for-llm-applications/

In the rapidly evolving world of AI and cybersecurity, Large Language Models (LLMs) present a fascinating frontier. Picture a layered cake: each tier adds flavor and complexity, and together the tiers form a delightful whole. The LLM App Stack works the same way. It is a multi-layered architecture in which each component plays a distinct role in turning a general-purpose LLM into a working application. In this article, we'll slice through each layer to understand its function, how it interacts with its neighbors, and what it means for cybersecurity.

Contextual Data and Pipelines: The Foundation Layer

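Every remarkable cake begins with a solid base. For the LLM App Stack, that foundation is contextual data: the documents, knowledge bases, and other records the application needs to draw on. Data pipelines collect this material from diverse sources, clean it, and split it into manageable chunks. Rather than retraining the model on this data, the application later retrieves the relevant chunks and supplies them in the prompt, so the quality of this layer directly shapes the quality of the answers.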
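To make the pipeline step concrete, here is a minimal Python sketch of ingestion, assuming plain-text documents arrive as `(source, text)` pairs. The helper names (`normalize`, `chunk_text`, `ingest`) and the chunk size and overlap values are illustrative, not taken from any particular pipeline tool; production pipelines typically also handle formats such as PDF and HTML, deduplication, and scheduling.

```python
import re
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class Chunk:
    source: str  # where the text came from (file name, URL, ...)
    index: int   # position of the chunk within its source
    text: str


def normalize(text: str) -> str:
    # Collapse runs of whitespace, e.g. line breaks left over from extraction.
    return re.sub(r"\s+", " ", text).strip()


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> List[str]:
    # Split text into fixed-size character windows that overlap by `overlap`.
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


def ingest(documents: Iterable[Tuple[str, str]],
           chunk_size: int = 200, overlap: int = 50) -> List[Chunk]:
    # Normalize each document, chunk it, and keep provenance for every chunk.
    out: List[Chunk] = []
    for source, raw in documents:
        for i, piece in enumerate(chunk_text(normalize(raw), chunk_size, overlap)):
            out.append(Chunk(source, i, piece))
    return out
```

Keeping the `source` on every chunk makes it possible to trace any retrieved passage back to its origin later. The overlap means a short sentence that straddles a chunk boundary is more likely to appear intact in at least one chunk, at the cost of some duplicated storage.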

Embedding Models and Vector Databases: The Core Layer

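The embedding models form the core of our cake, analogous to the rich flavors that define it. An embedding model converts each chunk of contextual data into a vector, a list of numbers arranged so that passages with similar meaning end up close together. These vectors are stored in a vector database, which supports similarity search: when a user asks a question, the question is embedded as well, and the database returns the chunks whose vectors lie nearest to it.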
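The sketch below illustrates the embed, store, and search loop in plain Python. The `embed` function is a hypothetical stand-in for a real embedding model: it uses feature hashing of words, so it captures word overlap rather than meaning. `InMemoryVectorStore` is likewise a toy that performs brute-force cosine search, where a real vector database would use approximate nearest-neighbor indexes to scale.

```python
import hashlib
import math
import re
from typing import List, Tuple

DIM = 256  # toy dimensionality; real embedding models use hundreds to thousands


def embed(text: str, dim: int = DIM) -> List[float]:
    # Hypothetical stand-in for an embedding model: hash each lowercase token
    # into one of `dim` buckets ("feature hashing"), then L2-normalize.
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.sha256(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


class InMemoryVectorStore:
    # Minimal stand-in for a vector database: stores (text, vector) pairs
    # and answers queries with an exhaustive cosine-similarity scan.
    def __init__(self) -> None:
        self._items: List[Tuple[str, List[float]]] = []

    def add(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def search(self, query: str, k: int = 3) -> List[Tuple[float, str]]:
        q = embed(query)
        # Vectors are unit length, so the dot product equals cosine similarity.
        scored = [(sum(a * b for a, b in zip(q, v)), text) for text, v in self._items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]
```

For example, after a few `store.add(...)` calls with policy snippets, `store.search("how do I reset my password", k=1)` returns the `(score, text)` pair with the highest cosine similarity. Replacing `embed` with a call to a real embedding model would make the ranking reflect meaning rather than shared words.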

Playground and Orchestration Layer: The Creative Space

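Next, we reach the playground and orchestration layer, akin to the icing and decoration of our cake, where creativity comes to life. The playground is where developers experiment with prompts and compare model responses before settling on a design. The orchestration layer then ties the stack together at runtime: it retrieves relevant context from the vector database, fills it into prompt templates, chains multiple LLM calls, invokes external tools, and keeps track of conversation memory.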
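Here is a minimal sketch of what an orchestration layer does on each request, assuming a generic `llm` callable that maps a prompt string to a completion. `keyword_retrieve` stands in for a vector-database query, and `PROMPT_TEMPLATE` and `Orchestrator` are hypothetical names; frameworks in this layer provide far richer versions of the same steps.

```python
from dataclasses import dataclass, field
from typing import Callable, List

PROMPT_TEMPLATE = (
    "Answer using only the context below.\n"
    "Context:\n{context}\n\n"
    "Conversation so far:\n{history}\n\n"
    "Question: {question}\nAnswer:"
)


def keyword_retrieve(question: str, docs: List[str], k: int = 2) -> List[str]:
    # Stand-in for a vector-database query: rank documents by shared words.
    q = set(question.lower().split())
    ranked = sorted(docs, key=lambda d: len(q & set(d.lower().split())), reverse=True)
    return ranked[:k]


@dataclass
class Orchestrator:
    llm: Callable[[str], str]  # any function mapping a prompt to a completion
    docs: List[str]
    history: List[str] = field(default_factory=list)  # simple conversation memory

    def ask(self, question: str) -> str:
        # 1. Retrieve context, 2. fill the template, 3. call the model, 4. remember.
        context = "\n".join(f"- {d}" for d in keyword_retrieve(question, self.docs))
        prompt = PROMPT_TEMPLATE.format(
            context=context,
            history="\n".join(self.history) or "(none)",
            question=question,
        )
        answer = self.llm(prompt)
        self.history += [f"User: {question}", f"Assistant: {answer}"]
        return answer
```

Passing a stub such as `lambda prompt: "..."` as `llm` makes it easy to inspect exactly which context and history end up in the prompt, which is also a useful habit when reviewing what data an application sends to a model provider.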

APIs, LLM Caches, and Logging/LLMOps: The Security and Monitoring Layer

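Like a cake protector safeguarding our masterpiece, APIs, LLM caches, and Logging/LLMOps form the security and monitoring layer. APIs and plugins connect the application to external services; because data crosses the system boundary here, this is a natural place to enforce authentication and access controls. LLM caches store responses to repeated prompts, cutting latency and cost. Logging/LLMOps tools record prompts, responses, latency, and usage, giving operators the visibility they need to monitor the system, audit its behavior, and investigate incidents.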
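As an illustration of how a cache and a logging hook can wrap every model call, here is a hypothetical `CachedLLM` class. It assumes exact-match caching keyed on a SHA-256 hash of the prompt; semantic caches that match similar prompts exist but are not shown. It logs the prompt hash rather than the prompt text, on the assumption that prompts may contain sensitive data that should not land in plain-text logs.

```python
import hashlib
import json
import logging
import time
from typing import Callable, Dict

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("llmops")


class CachedLLM:
    """Wraps a prompt -> completion function with an exact-match cache and logging.

    Hypothetical helper; production caches (e.g. Redis-backed) add eviction and TTLs.
    """

    def __init__(self, llm: Callable[[str], str]) -> None:
        self._llm = llm
        self._cache: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def __call__(self, prompt: str) -> str:
        key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        start = time.perf_counter()
        cached = key in self._cache
        if cached:
            self.hits += 1
            completion = self._cache[key]
        else:
            self.misses += 1
            completion = self._llm(prompt)
            self._cache[key] = completion
        # One structured record per call: enough to audit usage and spot anomalies
        # without writing the raw prompt to the log.
        log.info(json.dumps({
            "prompt_sha256": key[:16],
            "cached": cached,
            "latency_ms": round((time.perf_counter() - start) * 1000, 2),
            "prompt_chars": len(prompt),
        }))
        return completion
```

Wrapping any prompt-to-completion function, for example `llm = CachedLLM(call_model)` where `call_model` is a hypothetical client function of your own, leaves call sites unchanged while adding hit/miss counters and one JSON log line per request.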

Validation Layer and App Hosting: The Presentation Layer

Finally, we have the validation layer and app hosting: the cake stand that proudly displays our creation for all to see. The validation layer checks what goes into and comes out of the model, for example confirming that responses follow an expected format and screening inputs for prompt-injection attempts. App hosting provides the platform through which users interact with the LLM application.
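The validation layer can be sketched as two checks around the model call: one on untrusted input and one on model output. The pattern list, `screen_input`, and `validate_output` below are hypothetical and deliberately simple; a regex deny-list is easy to evade and is shown only to illustrate where input screening sits, not as an adequate prompt-injection defense.

```python
import json
import re
from typing import Any, Dict, List

# Illustrative deny-list only; real prompt-injection detection needs far more.
SUSPICIOUS_PATTERNS = [
    r"ignore (all |any )?(previous|prior) instructions",
    r"reveal (the |your )?system prompt",
]


def screen_input(user_text: str) -> List[str]:
    """Return the suspicious patterns found in user input (empty list = pass)."""
    lowered = user_text.lower()
    return [p for p in SUSPICIOUS_PATTERNS if re.search(p, lowered)]


def validate_output(raw: str, required: Dict[str, type]) -> Dict[str, Any]:
    """Parse model output as a JSON object and check required keys and types.

    Raises ValueError if the output does not match the expected shape.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"output is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("output must be a JSON object")
    for key, expected_type in required.items():
        if key not in data:
            raise ValueError(f"missing field: {key}")
        if not isinstance(data[key], expected_type):
            raise ValueError(f"field {key!r} should be {expected_type.__name__}")
    return data
```

In an application, a non-empty result from `screen_input` might route the request for review, and a `ValueError` from `validate_output` might trigger a retry or a safe fallback response rather than passing malformed output to downstream code.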

Conclusion and Looking Ahead

Like baking a perfect cake, building on the LLM App Stack is complex but rewarding. Each layer plays a distinct role in shaping the overall structure, and together the layers determine how effective and how secure an AI application can be. Looking ahead, we expect this stack and its components to keep evolving, opening the door to more innovative and more secure AI solutions.

Summary

In this article, we've dissected the layers of the LLM App Stack, each contributing to the broader picture of AI applications and cybersecurity. From the foundational contextual data to the validation and hosting layer, we've traced how this evolving structure fits together. Stay tuned for our next dive into the fascinating world of AI and cybersecurity.